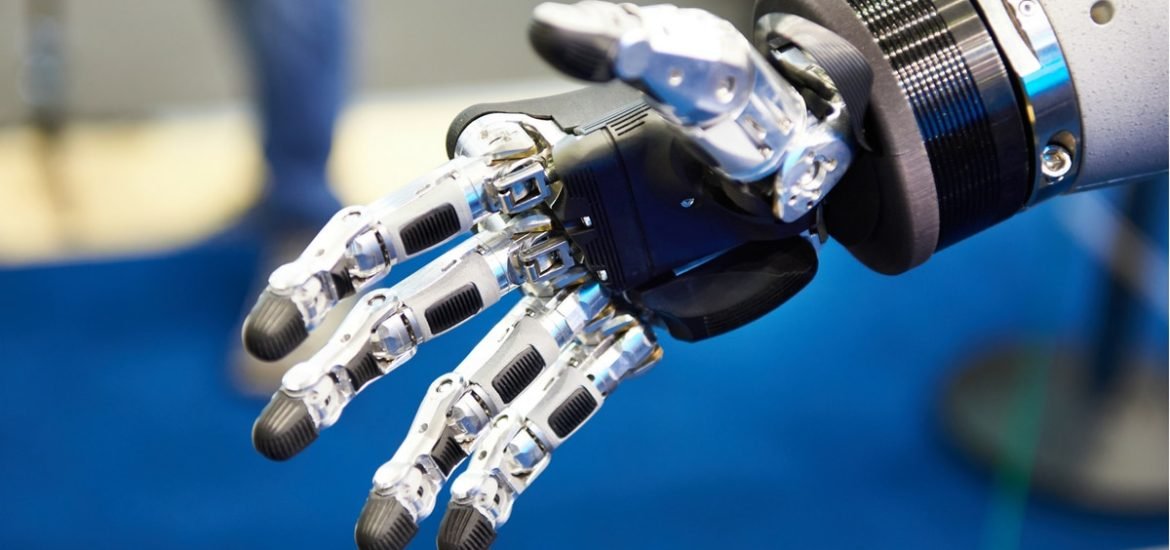

A new robotic hand with improved dexterity, unveiled on July 30th, has taught itself to manipulate objects much as a human does. This latest feat by OpenAI, a non-profit artificial intelligence (AI) research company backed in part by Elon Musk, was achieved with machine learning, a form of AI in which algorithms let machines learn new skills on their own instead of being explicitly programmed for each one. For the study, researchers used the "humaniform" Shadow Dexterous Hand, made by the London-based Shadow Robot Company.

The new robotic system, known as Dactyl, learns from scratch: a neural network controls the hand and is trained with a technique called reinforcement learning (RL). Using a scoring system, this type of training "rewards" the robot each time it accomplishes a task correctly and "punishes" it for counterproductive actions. Because this kind of learning is essentially trial and error, it can take a very long time, and physical robots are breakable. The training was therefore carried out first in a virtual environment, and physical tests were performed afterward to check whether what had been learned actually transferred to the real world.
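To make the reward-and-punishment idea concrete, here is a minimal sketch of reward-driven trial and error. It is not Dactyl's method: Dactyl trained a neural network at large scale, while this toy swaps in tabular Q-learning, a much simpler RL algorithm. The environment is hypothetical: a block with four faces around one axis (`N_FACES`), two actions (rotate left or right), a reward of +1 for reaching the target face and a penalty of -0.1 for every other move. The learning rate, discount and exploration values are illustrative assumptions.

```python
import random

N_FACES = 4          # hypothetical: four faces around one axis of the block
ACTIONS = (-1, +1)   # rotate the block one face left or one face right

def step(face, action, goal):
    """Toy environment: apply a rotation and return (new_face, reward, done)."""
    new_face = (face + action) % N_FACES
    if new_face == goal:
        return new_face, 1.0, True    # "reward" for reaching the target orientation
    return new_face, -0.1, False      # small "punishment" for every wasted move

def train(goal, episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: learn the value of each action in each state by trial and error."""
    rng = random.Random(seed)
    # q[s][a]: estimated future reward for taking action index a in state s
    q = [[0.0, 0.0] for _ in range(N_FACES)]
    for _ in range(episodes):
        face = rng.randrange(N_FACES)       # start each episode from a random face
        for _ in range(20):                 # cap the episode length
            if face == goal:
                break
            # Epsilon-greedy: usually pick the best-known action, sometimes explore.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[face][i])
            new_face, reward, done = step(face, ACTIONS[a], goal)
            # Move the estimate toward the reward plus discounted future value.
            target = reward if done else reward + gamma * max(q[new_face])
            q[face][a] += alpha * (target - q[face][a])
            face = new_face
            if done:
                break
    return q

def greedy_policy(q):
    """For each face, the rotation the trained agent now prefers."""
    return [ACTIONS[max(range(2), key=lambda i: q[s][i])] for s in range(N_FACES)]

q = train(goal=0)
print(greedy_policy(q))
```

After training, the agent prefers the single rotation that reaches the goal from faces 1 and 3, because the per-move penalty makes the longer route score lower. Nobody tells it which direction to turn; it finds out purely from the scores it receives.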

Most of the work was performed using a child's building block with letters on each side. In both the virtual and physical tests, the block was placed in the palm of the hand and Dactyl was instructed to rotate it into different orientations, for example, placing the side with a "B" facing down and the side with an "N" facing up. This is the first time a robotic hand has accomplished manipulation tasks this complex.

Researchers often train robots in simulation, but skills learned in virtual models usually don't transfer well to reality. This time was different. So how did these researchers overcome the "reality gap"? To get around the limitations of virtual learning, the team at OpenAI used a method they call "domain randomization." Random variations are introduced into each simulation, for example, changes to the weight or size of the block, so that the robot learns from a wide range of simulated experiences rather than from data gathered in the real world. A policy that succeeds across many randomized versions of the task is more likely to cope with the real world, which it can treat as just one more variation.
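The core of domain randomization can be sketched in a few lines: before every simulated episode, draw fresh physical properties for the block. The sketch below is illustrative only. The `BlockParams` fields, the numeric ranges in `RANGES` and the `run_training` loop are hypothetical assumptions, not OpenAI's actual parameters or code, and the reinforcement learning step itself is left out.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class BlockParams:
    """Hypothetical physical properties of the simulated block."""
    mass_kg: float
    size_cm: float
    friction: float

# Hypothetical randomization ranges: illustrative assumptions, not OpenAI's settings.
RANGES = {
    "mass_kg": (0.05, 0.15),
    "size_cm": (5.0, 6.5),
    "friction": (0.5, 1.5),
}

def sample_block_params(rng: random.Random) -> BlockParams:
    """Draw a freshly randomized block for one simulated episode."""
    return BlockParams(
        mass_kg=rng.uniform(*RANGES["mass_kg"]),
        size_cm=rng.uniform(*RANGES["size_cm"]),
        friction=rng.uniform(*RANGES["friction"]),
    )

def run_training(num_episodes: int, seed: int = 0) -> list[BlockParams]:
    """Skeleton training loop: every episode sees a differently randomized world."""
    rng = random.Random(seed)
    seen = []
    for _ in range(num_episodes):
        params = sample_block_params(rng)
        # A real system would configure the simulator with `params` here and
        # run one episode of reinforcement learning in it (omitted in this sketch).
        seen.append(params)
    return seen

for p in run_training(3):
    print(p)
```

Because the mass, size and friction change every episode, a policy trained this way cannot depend on any single exact value, so the real block's properties are likely to fall within a range it has already handled in simulation.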

According to the report published by OpenAI, "by learning in simulation, we can gather more experience quickly by scaling up, and by de-emphasizing realism, we can tackle problems that simulators can only model approximately." In other words, simulators can only approximate real-world physics, but because this method does not depend on a perfectly realistic simulation or on enormous quantities of human input, the robot can learn faster and take on increasingly complex problems.

So far, the system can perform around fifteen consecutive manipulations on average before running into problems such as dropping the block, and it has even discovered, on its own, tricks that humans use, such as relying on gravity to reorient the block and spinning the cube with only two fingers. This is a significant step toward bringing robots trained with machine learning into real-world applications. It will be exciting to see what comes next.